Abstract

INTRODUCTION

This study examines the relationship between the clinical performance of medical students and their performance as doctors during their internships.

METHODS

This retrospective study involved 63 applicants to a residency programme conducted at Chonnam National University Hospital, South Korea, in November 2012. We compared the applicants’ performance during their internship with their clinical performance during their fourth year of medical school. Their performance as interns was periodically evaluated by the faculty of each department, while their clinical performance as fourth-year medical students was assessed using the Clinical Performance Examination (CPX) and the Objective Structured Clinical Examination (OSCE).

RESULTS

The applicants’ performance as interns was positively correlated with their clinical performance as fourth-year medical students, as measured by the CPX and OSCE. Intern performance was moderately correlated with scores on the CPX patient-physician interaction items, which address communication and interpersonal skills.

CONCLUSION

The clinical performance of medical students during their fourth year in medical school was related to their performance as medical interns. Medical students should be trained to develop good clinical skills through actual encounters with patients or simulated encounters using manikins, to enable them to become more competent doctors.

INTRODUCTION

It is crucial that medical students acquire appropriate clinical skills. These include the ability to gather and interpret information provided by patients and the ability to formulate treatment plans based on the medical knowledge obtained in preclinical and clinical courses and on interactions with patients during clinical clerkships. The clinical performance of medical students is expected to affect their future performance as doctors.

In 1975, Harden et al(1) introduced a structured clinical examination that assessed medical students’ skills, attitudes, problem-solving abilities and factual knowledge. The implementation of clinical skills examinations has had a positive effect on the clinical performance of medical graduates: graduates who passed a clinical skills examination were less likely to be judged deficient than those who had not taken the examination.(2,3)

The relationship between medical students’ performance in clinical skills examinations and their actual performance as doctors (as rated by supervising directors) has yet to be definitively established. In one study, students’ clinical performance was positively associated with their supervising consultants’ assessments during the preregistration house officer year, although this association did not reach conventional levels of statistical significance.(4) In another study, the students’ examination scores were correlated with their performance as first-year residents, but again without statistical significance.(5) By contrast, a third study found that only the interpersonal score from a prototype examination correlated with the students’ subsequent performance as interns.(6)

The Clinical Performance Examination (CPX) assesses communication skills, professional attributes, clinical skills and knowledge in realistic clinical encounters,(7) while the Objective Structured Clinical Examination (OSCE) measures technical skills. We therefore hypothesised that a student’s performance in the CPX and OSCE could influence his or her performance as a doctor. The present study aimed to investigate whether the clinical performance of medical students, as measured by the CPX and OSCE, could predict their performance as doctors in a clinical setting. It also aimed to identify which component(s) of the students’ CPX and OSCE performance were most strongly related to their actual performance as doctors.

METHODS

There were 90 applicants to the residency programme conducted at Chonnam National University Hospital (CNUH), South Korea, in November 2012. Of these, 63 (70.0%) had graduated from Chonnam National University Medical School (CNUMS), South Korea (affiliated with CNUH), had student clinical performance data available, and were included in the study. All 63 participants were undergoing or had completed a one-year internship at CNUH. The Institutional Review Board of CNUH exempted the study from the regulations governing research involving human subjects. All participants were informed that their records would remain confidential and gave written consent.

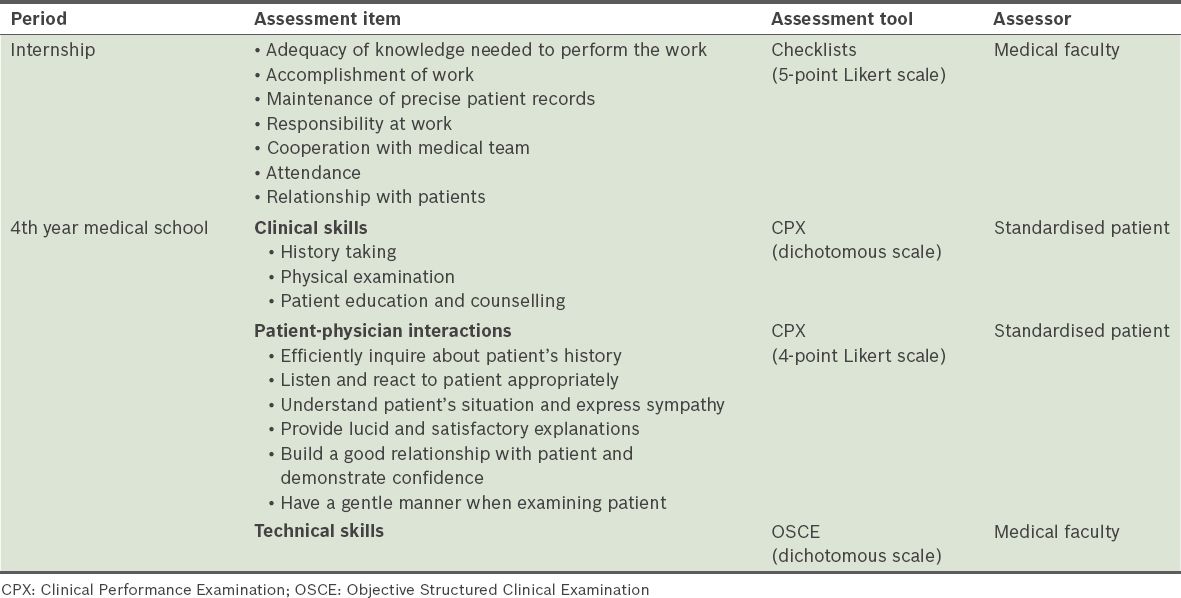

At CNUH, medical interns rotate through the clinical departments, spending two weeks in each during their one-year internship. This includes training in compulsory departments such as internal medicine, surgery, paediatrics, and obstetrics and gynaecology. The medical faculty of each department evaluated the interns’ performance on the following assessment items: adequacy of the knowledge needed to perform the work; accomplishment of work; maintenance of precise patient records; responsibility at work; cooperation with the medical team; attendance; and relationship with patients (Table I).

Table I

Description of the assessment items used to measure the performance of the intern/medical student.
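The Limitations section states that each intern’s score was the average of the summed two-week assessment scores. A minimal Python sketch of that aggregation follows. The item keys, the score scale and the example values are hypothetical, because the text does not give the range of each item in Table I.

```python
from statistics import mean

# Hypothetical shorthand keys for the seven intern assessment items in Table I.
ITEMS = (
    "knowledge",
    "accomplishment_of_work",
    "patient_records",
    "responsibility",
    "cooperation_with_team",
    "attendance",
    "relationship_with_patients",
)


def rotation_total(item_scores: dict[str, float]) -> float:
    """Sum one department's seven item scores for a single two-week rotation."""
    missing = set(ITEMS).difference(item_scores)
    if missing:
        raise ValueError(f"missing items: {sorted(missing)}")
    return sum(item_scores[item] for item in ITEMS)


def intern_score(rotations: list[dict[str, float]]) -> float:
    """Average the per-rotation totals over all of an intern's rotations."""
    if not rotations:
        raise ValueError("at least one rotation is required")
    return mean(rotation_total(r) for r in rotations)


if __name__ == "__main__":
    # Two invented rotations, e.g. internal medicine followed by surgery.
    medicine = {item: 4.0 for item in ITEMS}
    surgery = {item: 3.0 for item in ITEMS}
    print(intern_score([medicine, surgery]))
```

Summing within a rotation before averaging across rotations keeps every department’s evaluation on the same footing, whatever the number of rotations an intern has completed.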

In the fourth year of medical school, the clinical performance of the medical students was measured using the CPX and OSCE (Table I).

At CNUMS, 12 testing stations are set up for the CPX and OSCE; students complete the stations individually, 12 at a time, in one of two sequences: CPX-OSCE-CPX or OSCE-CPX-OSCE. The CPX score (maximum 100 points) comprises clinical skills (history-taking, physical examination, patient education and patient counselling; 75% of the score) and patient-physician interaction (the remaining 25%). One trained standardised patient rated the student’s clinical skills on a case-specific checklist out of the student’s view, while another standardised patient rated the patient-physician interaction in the student’s presence on a 4-point Likert scale (1 = poor, 4 = excellent). The items used to assess patient-physician interaction (‘efficiently inquired about my history’, ‘listened and reacted to me appropriately’, ‘understood my situation and expressed sympathy’, ‘provided lucid and satisfactory explanations’, ‘built a good relationship with me and demonstrated confidence’, and ‘had a gentle manner when examining me’) are the same in all CPX examinations. The Jeolla Consortium, organised by four medical schools (including CNUMS), developed the clinical cases and has trained the standardised patients since 2002. The maximum OSCE score is 50 points; the medical faculty assessed the students’ technical skills at each station using a procedure-specific checklist. The students’ CPX (clinical skills and patient-physician interaction) and OSCE (technical skills) scores were provided as an average score for each assessment item (i.e. the mean of the scores obtained at the six clinical stations for that item).
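The scoring scheme above can be sketched in Python as follows, assuming (as the text implies) six CPX and six OSCE stations per student. The function names are hypothetical, and the linear rescaling of the six Likert ratings (1 to 4) onto the 25-point patient-physician interaction scale is an assumption: the text does not state how the ratings map onto those 25 points.

```python
from statistics import mean

CLINICAL_MAX = 75.0   # clinical skills: 75% of the 100-point CPX
PPI_MAX = 25.0        # patient-physician interaction: remaining 25%
OSCE_MAX = 50.0       # maximum OSCE score
N_PPI_ITEMS = 6       # six standardised-patient items, each rated 1 to 4


def ppi_points(ratings: list[int]) -> float:
    """Rescale one station's six Likert ratings onto the 25-point PPI scale.

    ASSUMPTION: linear rescaling of the summed ratings (6..24 -> 6.25..25);
    the source does not describe the actual conversion.
    """
    if len(ratings) != N_PPI_ITEMS or any(r not in (1, 2, 3, 4) for r in ratings):
        raise ValueError("expected six ratings, each between 1 and 4")
    return sum(ratings) / (4 * N_PPI_ITEMS) * PPI_MAX


def cpx_item_averages(stations: list[tuple[float, list[int]]]) -> dict[str, float]:
    """Average each CPX item over a student's CPX stations.

    Each station is (clinical_skills_points, ppi_ratings), with clinical
    skills already scored out of 75 on the case-specific checklist.
    """
    if not stations:
        raise ValueError("at least one CPX station is required")
    if any(not 0.0 <= c <= CLINICAL_MAX for c, _ in stations):
        raise ValueError("clinical skills points must lie between 0 and 75")
    clinical = mean(c for c, _ in stations)
    ppi = mean(ppi_points(r) for _, r in stations)
    return {"clinical_skills": clinical, "ppi": ppi, "cpx_total": clinical + ppi}


def osce_average(station_scores: list[float]) -> float:
    """Average the procedure-checklist scores (each out of 50) over OSCE stations."""
    if not station_scores or any(not 0.0 <= s <= OSCE_MAX for s in station_scores):
        raise ValueError("need at least one OSCE score between 0 and 50")
    return mean(station_scores)


if __name__ == "__main__":
    # Invented scores for one student at six CPX and six OSCE stations.
    cpx_stations = [
        (42.0, [3, 3, 2, 3, 3, 3]),
        (38.5, [3, 2, 3, 3, 2, 3]),
        (45.0, [4, 3, 3, 3, 3, 4]),
        (40.0, [3, 3, 3, 2, 3, 3]),
        (36.0, [2, 3, 2, 3, 3, 3]),
        (41.5, [3, 3, 3, 3, 3, 3]),
    ]
    print(cpx_item_averages(cpx_stations))
    print(osce_average([41.0, 38.0, 40.5, 39.0, 37.5, 42.0]))
```

Averaging each item separately, rather than only the station totals, is what allows clinical skills and patient-physician interaction to be correlated with intern performance as distinct variables.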

All analyses were performed using PASW Statistics version 18.0 (SPSS Inc, Chicago, IL, USA). Pearson’s correlation analyses were used to investigate the relationships between the participants’ performance as interns (using the scores obtained from the medical faculty evaluation) and the participants’ performance as medical students (using the CPX and OSCE scores).
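Pearson’s r divides the covariance of the paired scores by the product of their standard deviations, and the null hypothesis ρ = 0 is conventionally tested with t = r√(n − 2)/√(1 − r²) on n − 2 degrees of freedom. The standard-library Python sketch below illustrates that calculation; it is not the authors’ PASW procedure, and the example scores are invented.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between paired scores."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need at least three paired observations")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares about the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


if __name__ == "__main__":
    # Invented intern ratings and CPX patient-physician interaction scores.
    intern = [26.0, 24.5, 28.0, 27.5, 25.0, 29.0]
    ppi = [15.0, 14.0, 17.5, 16.0, 15.5, 17.0]
    r = pearson_r(intern, ppi)
    print(f"r = {r:.3f}, t = {t_statistic(r, len(intern)):.3f}")
```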

RESULTS

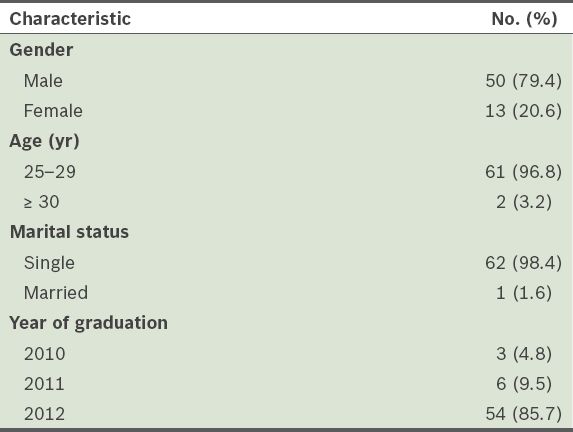

Of the 63 participants, 50 (79.4%) were male and 13 (20.6%) were female (Table II).

Table II

General characteristics of the study participants (n = 63).

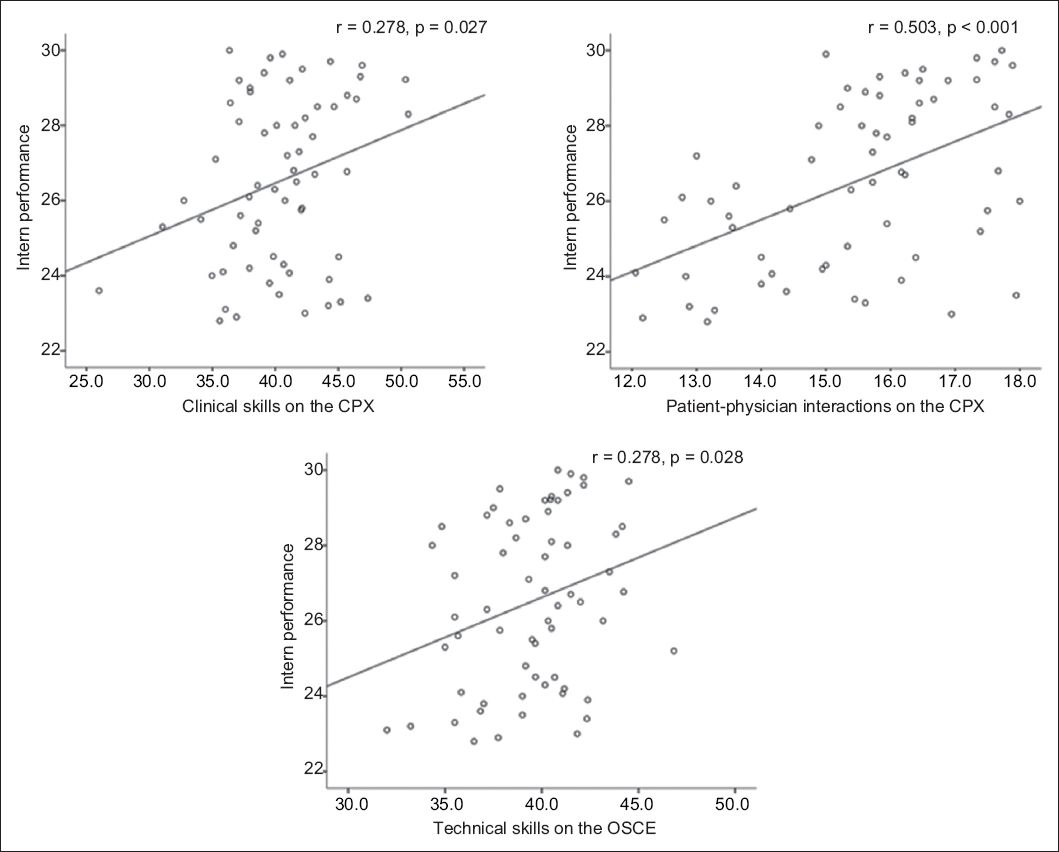

The mean performance score of the participants as interns was 26.5 ± 2.3. As medical students, their mean scores were 40.4 ± 4.4 for clinical skills and 15.5 ± 1.6 for patient-physician interaction (both measured by the CPX), and 39.5 ± 2.9 for technical skills (measured by the OSCE). The participants’ performance as interns was positively correlated with all three measures of their performance as medical students: clinical skills (r = 0.278, p = 0.027), patient-physician interaction (r = 0.503, p < 0.001) and technical skills (r = 0.278, p = 0.028) (Fig. 1).

Fig. 1

Scatter plots show the correlations between the performance of the participants as interns and their performance as medical students. CPX: Clinical Performance Examination; OSCE: Objective Structured Clinical Examination
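Because the t statistic for a Pearson correlation depends only on r and n, the reported p-values can be checked from r and n = 63 alone. The Python sketch below (standard library only) converts t to a two-sided p-value through the regularised incomplete beta function, P(|T| > t) = I_x(df/2, 1/2) with x = df/(df + t²), evaluated with the textbook modified Lentz continued fraction. The function names are illustrative, not part of the original analysis.

```python
import math

TINY = 1e-300  # guards against division by zero in Lentz's method


def _betacf(a: float, b: float, x: float, max_iter: int = 200, eps: float = 3e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > TINY else TINY)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > TINY else TINY)
        c = 1.0 + aa / c
        c = c if abs(c) > TINY else TINY
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > TINY else TINY)
        c = 1.0 + aa / c
        c = c if abs(c) > TINY else TINY
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def regularized_incomplete_beta(a: float, b: float, x: float) -> float:
    """I_x(a, b), using the continued fraction on its fast-converging side."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def two_sided_p(r: float, n: int) -> float:
    """Two-sided p-value for H0: rho = 0, from the t test with n - 2 df."""
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1.0 - r * r)
    # For Student's t: P(|T| > t) = I_{df/(df + t^2)}(df/2, 1/2).
    return regularized_incomplete_beta(df / 2.0, 0.5, df / (df + t * t))


if __name__ == "__main__":
    for label, r in [("clinical skills", 0.278), ("patient-physician interaction", 0.503)]:
        print(f"{label}: r = {r}, two-sided p = {two_sided_p(r, 63):.4f}")
```

With r = 0.278 this gives p ≈ 0.027, and with r = 0.503 it gives p < 0.001, in line with the values reported above.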

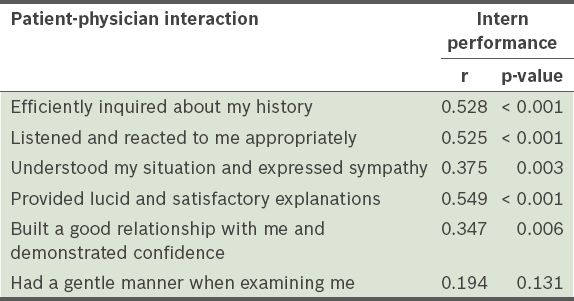

The participants’ performance as interns correlated with all items in the patient-physician interaction category of the CPX except the one concerning the physical examination (‘had a gentle manner when examining me’) (Table III).

Table III

Correlations between the performance of the participants as interns and their patient-physician interactions as medical students.

DISCUSSION

In the present study, the interns’ performance was positively correlated with their performance as fourth-year medical students, as measured by the clinical skills and patient-physician interaction items of the CPX and the technical skills item of the OSCE. Of these, the students’ communication and interpersonal skills were the most strongly related to their performance during internship.

Previous studies have suggested that medical students’ performance in clinical skills examinations may predict their subsequent performance during internship and the first year of residency.(4-6) In one study, interns’ clinical skills examination results were related both to the medical faculty’s evaluations of their actual performance in a clinical setting and to their results in written examinations of medical knowledge taken during the same period.(8) The present study likewise demonstrated a positive relationship between medical students’ clinical performance in their fourth year of medical school and their subsequent performance as interns. In particular, the students’ interpersonal skills scores were correlated with their performance as interns. Unlike a previous study, in which interpersonal scores (including those from language proficiency tests) were positively related to future performance,(6) our results indicate that intrinsic communication and interpersonal skills were related to subsequent clinical performance without the confounding influence of language differences.

Interestingly, the clinical and technical skills assessed by the CPX and OSCE were more weakly correlated with intern performance than was patient-physician interaction on the CPX. There are several possible explanations for this finding. First, the medical faculty of each department rated the interns’ job performance on the completeness and accuracy of their work as a whole, whereas the CPX and OSCE relied on case-specific standardised checklists. Although ward evaluations are sufficiently reliable reflections of the faculty’s view of each intern, faculty members tend to offer global, undifferentiated judgements that do not identify specific deficiencies in performance skills.(8,9) Second, the finding may relate to the time lapse between the two measures; the study’s sample size was not large enough to adjust statistically for the effects of time and of interventions such as feedback and remediation.(10) The students’ clinical and technical skills may have improved more than their interpersonal skills before the end of their undergraduate training, as they would have spent time and effort preparing for the clinical skills examination of the National Medical Licensing Examination, administered at the end of that training. Repeated practice could narrow the gap between the students’ clinical and technical skills before and after graduation, whereas their patient-physician interaction skills might have remained unchanged or changed little because this area received less emphasis.(11,12) This may explain why the correlations for clinical and technical skills were weaker than expected. Finally, the medical faculty may have placed a high value on communication and interpersonal skills in their overall assessments of the interns;(6) if so, these parameters may be the most valid indices of the ward evaluation.(8)

The present study has several limitations. First, the sample was small and drawn from a single institution, so the findings on the relationship between medical students’ clinical performance and their performance as interns cannot be generalised. Second, the interns’ performance in actual clinical settings was not assessed via a standardised examination. A two-week rotation in a single department may be too short to evaluate interns on all the assessment scales; to mitigate this, we used the average of the summed two-week assessment scores across rotations. Third, faculty members assessed the interns’ interpersonal skills in their capacity as supervising directors; assessment by a standardised patient would have been preferable. Fourth, because only the interns’ overall performance scores were provided, we could not examine the relationship between each subcomponent of their performance as interns and their performance as medical students. Fifth, the period over which the participants’ performance as students was compared with their performance as physicians was brief; it will be important to ascertain whether the relationship between performance in medical school and performance after graduation (i.e. as interns and, later, as physicians) persists in the long term. Finally, the study is limited by its retrospective design: all participants had already passed the National Medical Licensing Examination and achieved the minimum level of clinical proficiency before starting their internship. Prospective studies are therefore needed to minimise selection bias and the influence of confounding factors.

In conclusion, the medical students’ performance in the CPX and OSCE correlated positively with their performance as interns. Their interpersonal scores on the CPX were the most closely correlated with their subsequent performance as interns. We suggest that emphasis be placed on educational programmes designed to improve communication and interpersonal skills, clinical reasoning skills and technical skills.

ACKNOWLEDGEMENTS

The authors thank Prof Myung-Ho Jeong and Mr Sam-Hee Kim from the Office of Education and Research at Chonnam National University Hospital, South Korea, for their help in the study’s data collection.