Abstract

INTRODUCTION

Metacognition is a cognitive debiasing strategy that clinicians can use to deliberately detach themselves from the immediate context of a clinical decision, allowing them to reflect on their thinking process. However, cognitive debiasing strategies are often most needed when the clinician cannot afford the time to use them. A mnemonic checklist known as TWED (T = threat, W = what else, E = evidence and D = dispositional factors) was recently created to facilitate metacognition. This study explores the hypothesis that the TWED checklist improves the quality of medical students’ clinical decisions.

METHODS

Two groups of final-year medical students from Universiti Sains Malaysia, Malaysia, were recruited to participate in this quasi-experimental study. The intervention group (n = 21) received an educational intervention that introduced the TWED checklist, while the control group (n = 19) received a tutorial on basic electrocardiography. Post-intervention, both groups received a similar assessment of clinical decision-making based on five case scenarios.

RESULTS

The mean score of the intervention group was significantly higher than that of the control group (18.50 ± 4.45 marks vs. 12.50 ± 2.84 marks, p < 0.001). In three of the five case scenarios, students in the intervention group obtained higher scores than those in the control group.

CONCLUSION

The results of this study support the use of the TWED checklist to facilitate metacognition in clinical decision-making.

INTRODUCTION

According to the dual process theory, there are two types of decision-making – Types 1 and 2.(1-5) The defining feature of Type 1 is automaticity, which facilitates fast decision-making independent of higher-level control.(4,5) The defining feature of Type 2 is cognitive decoupling, which involves the analytical ability to compare and contrast alternatives using imagination before making a decision.(4,5)

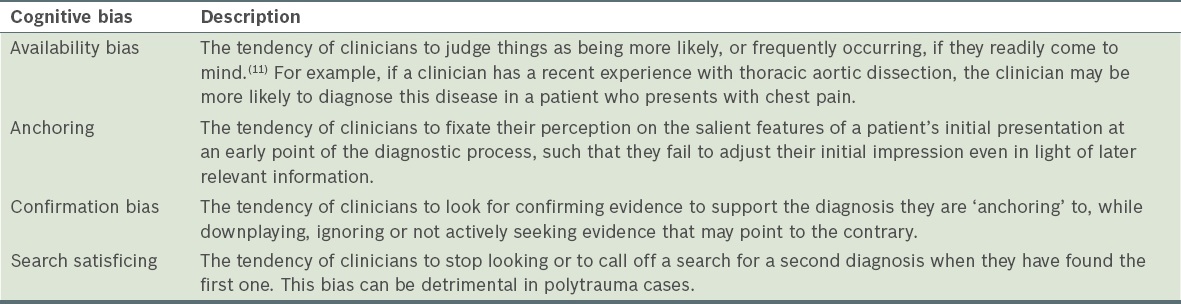

Clinical decision-making is a complex process involving interaction between Type 1 and Type 2 processes.(1,6,7) Type 1 decision-making can produce fast and accurate clinical decisions, particularly if the decision-maker is an experienced clinician armed with the necessary knowledge, skills and experience (collectively known as ‘mindware’).(8) However, Type 1 processing is more susceptible to cognitive biases than Type 2.(6,9) Cognitive biases, defined as deviations from rationality,(10) may lead clinicians into medical errors if they go unchecked.(9) Numerous cognitive biases have been identified, including availability bias, anchoring, confirmation bias and search satisficing.(11) A brief description of these common cognitive biases is given in Table I.

Table I

Common cognitive biases in clinical medicine.

Many strategies to reduce cognitive biases (i.e. debias) have been proposed.(11-13) A common denominator undergirding these strategies is critical self-reflection with a heightened sense of vigilance.(9,12) Metacognitive regulation (i.e. thinking about thinking) is one such strategy; it is defined as the ability to deliberately detach oneself from the immediate context in which the decision is made in order to reflect on the thinking process used.(11,12) Metacognition allows one to check for conflicting evidence and consider alternatives to the decisions made.(12)

However, cognitive debiasing is easy in theory yet difficult in practice.(11,14,15) Pessimism still generally prevails about how best to put debiasing strategies into practice.(9,11,15) This challenge is particularly germane to clinical decision-making in a stressful environment such as the emergency department.(16) Clinicians may be more likely to use Type 1 decision-making when they are busy,(3) as it allows them to make swift, automatic and reflexive decisions. Furthermore, many cognitive debiasing strategies take time and slow down the entire clinical decision-making process; hence, they may be ineffective in reducing medical errors.(17) When the emergency department is not operating under stressful conditions, clinicians theoretically have more time to analyse the situation critically and ensure that nothing of importance is missed; under stressful conditions, they have less time to do so. This is paradoxical, as cognitive debiasing strategies are most needed in stressful environments. It has therefore been theorised that the process used to counter cognitive biases (which occur more commonly in Type 1 thinking) should itself be a Type 1 thinking process; that is, the strategy must be easily retrievable and largely automatised in a stressful environment.

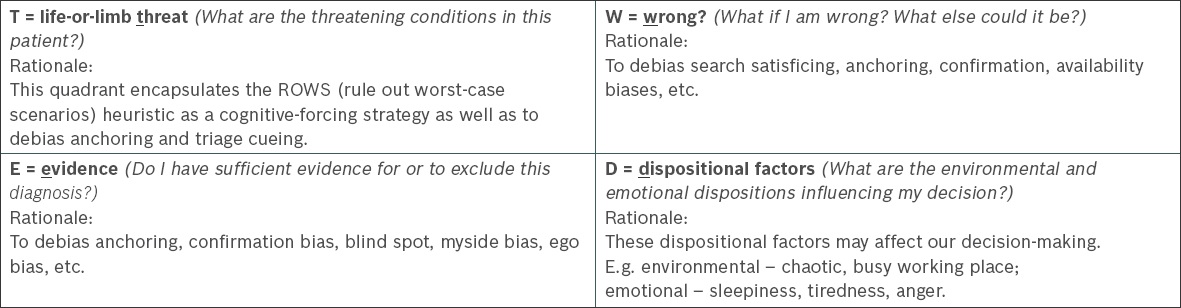

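The TWED checklist (Fig. 1) is a mnemonic in which T = threat, W = what else, E = evidence and D = dispositional factors; it was created to facilitate metacognition.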

Fig. 1

Diagram shows the TWED checklist and the potential cognitive biases it addresses.

The present study aimed to test the hypothesis that the TWED checklist facilitates metacognition among medical students so that they can make better-quality clinical decisions. Decision quality was measured by the students’ ability to generate a second, more serious diagnosis and to decide on appropriate investigations and management plans.

METHODS

This study was approved by the Research Ethics Committee of Universiti Sains Malaysia, Malaysia. Two groups of final-year (i.e. Year 5) medical students from the Universiti Sains Malaysia class of 2013/14 were selected for this quasi-experimental study. The intervention group (n = 21) received an educational intervention that consisted of a 90-minute tutorial on cognitive biases and debiasing strategies. The tutorial included an introduction to the dual process theory of thinking, and a discussion of various common cognitive biases, cognitive debiasing strategies and the TWED checklist. The students in the intervention group were also given a demonstration of how to apply the TWED checklist in clinical cases. During the tutorial, the tutors emphasised that the TWED checklist is not an instantaneous solution and requires repeated practice in a clinical setting. The control group (n = 19) was not exposed to this educational intervention; instead, it received a 90-minute tutorial on basic electrocardiography.

A set of five clinical case scenarios was used as the assessment tool for this study. These case scenarios were designed to test the students’ ability to look beyond apparent diagnoses to generate alternative hypotheses or diagnoses. The cases were framed to lead the students to make an obvious diagnosis that was not necessarily incorrect, but was not the critical diagnosis. Apart from the clinical signs that pointed toward the obvious diagnosis in each case, other subtle clinical cues indicated the likelihood of a more urgent or life-threatening diagnosis that should be considered. In real-life situations, the failure to consider these diagnoses may be detrimental to the patient. Common potential cognitive biases were embedded in each case: availability bias in Cases 2, 3 and 4; anchoring in Case 4; confirmation bias in Cases 4 and 5; and search satisficing in all five cases.

Undergirding the construction of these cases was the theoretical notion that the students would be more likely to pick up on the alternative diagnoses if they reflected on the questions posed in the TWED checklist. Each case scenario had 2–3 questions, one question testing their ability to generate alternative diagnoses that should be considered and 1–2 questions testing their ability to make decisions on various management aspects of the case (e.g. whether certain investigations or treatment modalities were required, and whether the patient should be discharged). The maximum marks allotted to each question were made known to the students. Detailed descriptions of the objectives of the five cases, the embedded cognitive biases and how the TWED checklist can help to promote metacognition are shown in

During the first week of their emergency medicine posting, the intervention group received a 90-minute tutorial on cognitive biases and debiasing strategies (i.e. the educational intervention), while the control group received a 90-minute tutorial on basic electrocardiography interpretation. Two weeks later, the students in both groups were asked to independently and anonymously complete the test on the five case scenarios. Students in the intervention group were asked to think about their initial impressions or diagnoses before reflecting on the questions in the TWED checklist. A quiz, in the form of 20 true/false factual recall questions, was administered to both groups before they started on the test. This was immediately followed by feedback on the correct answers, although the quiz was not scored. The purpose of the quiz was to ensure that the students had the necessary knowledge to answer the questions in the case scenarios. For example, to ascertain that the students had the necessary knowledge to answer Case 1 (

The students’ responses were evaluated by two assessors, both emergency physicians and senior lecturers. The assessors marked independently using a marking scheme that was provided, and each was blinded to the other’s marks and to the students’ group allocation. The average of the two assessors’ marks was used for statistical analysis. When students gave alternative diagnoses that were not listed in the marking scheme, the assessors used their discretion to decide whether marks should be awarded.
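The scoring procedure above (two independent assessors, averaged marks) is usually checked with an intraclass correlation coefficient (ICC), which the Results report per case. The paper does not state which ICC form was used, so the sketch below assumes the two-way random-effects, absolute-agreement form of Shrout and Fleiss, computed from two-way ANOVA mean squares. It returns both the single-assessor ICC(2,1) and the average-of-assessors ICC(2,k); the function names and the marks are hypothetical and are not the study data.

```python
from statistics import mean


def average_marks(rater_a, rater_b):
    """Per-student mean of two assessors' marks (the score the study analysed)."""
    if len(rater_a) != len(rater_b):
        raise ValueError("both assessors must mark the same students")
    return [(a + b) / 2 for a, b in zip(rater_a, rater_b)]


def icc_two_way(ratings):
    """Shrout-Fleiss ICC(2,1) and ICC(2,k) from an n-students x k-raters table.

    Assumed form: two-way random effects, absolute agreement.
    """
    n, k = len(ratings), len(ratings[0])
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]

    # Partition the total sum of squares into students, raters and residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    icc_single = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
    icc_average = (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)
    return icc_single, icc_average


# Hypothetical marks for one case from two assessors (not study data).
assessor_1 = [4, 6, 3, 8, 5, 7]
assessor_2 = [5, 6, 2, 8, 4, 7]
single, average = icc_two_way([list(p) for p in zip(assessor_1, assessor_2)])
print(average_marks(assessor_1, assessor_2))  # [4.5, 6.0, 2.5, 8.0, 4.5, 7.0]
print(round(single, 2), round(average, 2))  # 0.94 0.97
```

Because the analysed score was the mean of both assessors, ICC(2,k) may be the more relevant figure, but which variant the authors reported is not stated.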

RESULTS

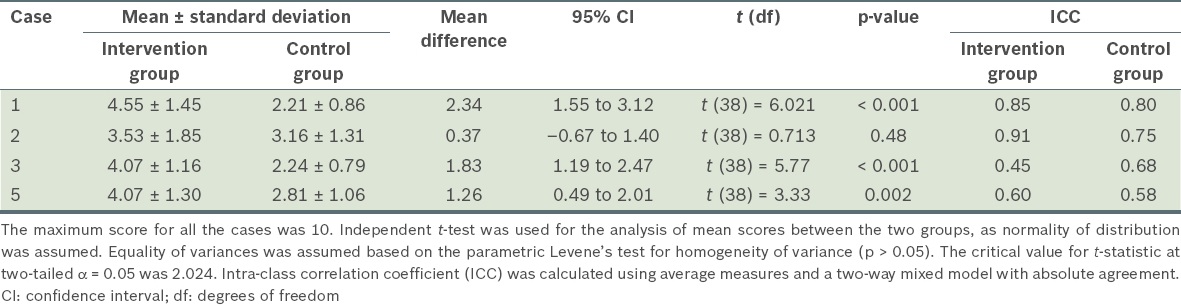

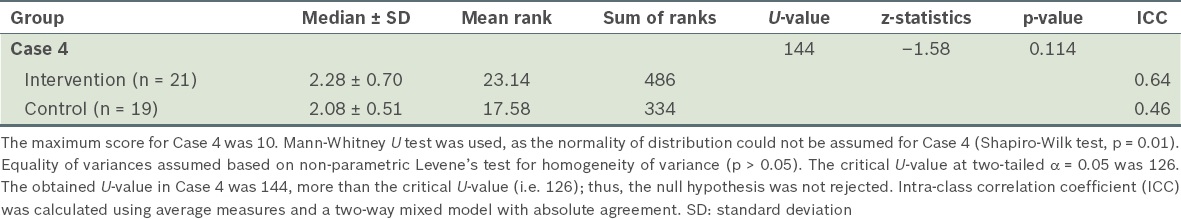

Interrater agreement was good for most cases, with intra-class correlation coefficients of 0.93 for Case 1, 0.86 for Case 2, 0.76 for Case 3, 0.45 for Case 4 and 0.70 for Case 5; agreement was weakest for Case 4. Overall, students in the intervention group scored higher than those in the control group in all five cases. The aggregate scores met parametric assumptions (z-values for skewness and kurtosis within ± 1.96; Shapiro-Wilk test p > 0.05), so an independent-samples t-test was used to compare the aggregate mean scores across all five cases. The intervention group (mean: 18.50 ± 4.45 marks, max: 50 marks) scored significantly higher than the control group (mean: 12.50 ± 2.84 marks, max: 50 marks; t[38] = 5.01, p < 0.001). As the t-statistic exceeded the two-tailed critical value at α = 0.05 (i.e. 2.024 for 38 degrees of freedom), the null hypothesis was rejected. Detailed comparisons of the scores for each case are shown in Tables II and III.
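The reported t-statistic can be checked from the published summary statistics alone. The reported 38 degrees of freedom equal 21 + 19 − 2, which suggests the pooled-variance (Student’s) form of the test, so that form is assumed below; the helper name is hypothetical. The critical value 2.024 is taken from the text rather than computed, because the Python standard library has no t-distribution quantile function.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent-samples t from means, SDs and group sizes (equal variances assumed)."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Summary statistics as reported for the intervention and control groups.
t, df = pooled_t_from_summary(18.50, 4.45, 21, 12.50, 2.84, 19)
T_CRIT = 2.024  # two-tailed critical value for df = 38 at alpha = 0.05, as stated in the text
print(f"t[{df}] = {t:.2f}, reject H0: {abs(t) > T_CRIT}")  # t[38] = 5.02, reject H0: True
```

The result (about 5.02) is close to the reported 5.01; the small gap presumably reflects rounding of the published means and standard deviations.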

Table II

Comparison of the mean scores of the intervention (n = 21) and control (n = 19) groups for Cases 1, 2, 3 and 5.

Table III

Comparison of the mean ranks of both the intervention and control groups for Case 4 using the Mann-Whitney U test.
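Table III compares Case 4 using mean ranks and the Mann-Whitney U test. The sketch below shows how those quantities follow from raw marks: all marks are pooled and ranked, tied marks share the average of the ranks they span (midranks), U is derived from one group’s rank sum, and z uses the usual tie-corrected normal approximation without a continuity correction. It is an illustration under those assumptions, not necessarily the routine the authors’ statistical software used, and the marks are hypothetical.

```python
import math
from collections import Counter


def midranks(values):
    """Rank values from 1..N; tied values share the mean of the ranks they span."""
    ordered = sorted(values)
    first_rank = {}
    for rank, v in enumerate(ordered, start=1):
        first_rank.setdefault(v, rank)
    counts = Counter(ordered)
    return [first_rank[v] + (counts[v] - 1) / 2 for v in values]


def mann_whitney(group1, group2):
    """Mean ranks, U and a tie-corrected normal-approximation z (no continuity correction)."""
    n1, n2 = len(group1), len(group2)
    pooled = list(group1) + list(group2)
    ranks = midranks(pooled)
    r1, r2 = sum(ranks[:n1]), sum(ranks[n1:])
    u1 = r1 - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    n = n1 + n2
    # Ties shrink the variance of U; t is the size of each group of tied marks.
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    z = (u1 - n1 * n2 / 2) / sigma
    return {"mean_rank_1": r1 / n1, "mean_rank_2": r2 / n2, "U": min(u1, u2), "z": z}


# Hypothetical Case 4 marks (not study data).
intervention = [6, 5, 7, 4, 6, 8]
control = [3, 4, 2, 5, 3]
print(mann_whitney(intervention, control))
```

A positive z here means the first group tends to have higher ranks; the two-sided p-value would then come from the standard normal distribution.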

DISCUSSION

The results of this study showed that an educational intervention in the form of a 90-minute tutorial on cognitive biases and debiasing strategies, including an introduction to the TWED checklist, improved medical students’ performance on an assessment of clinical decision-making. Although clinicians may intend to avoid diagnostic errors that result from cognitive biases, this intention may not translate into an executable goal. To bridge the gap between goal intention and required action, Gollwitzer conceptualised the implementation intention.(18) An implementation intention differs from a goal intention; it is a predecided plan that allows goal intentions to be executed automatically even in unfavourable environments (e.g. a busy and stressful setting). For example, if the intended goal is to minimise diagnostic errors secondary to cognitive biases, the implementation intention could be the use of a mnemonic checklist, such as the TWED checklist, which is memorable and easily retrievable.

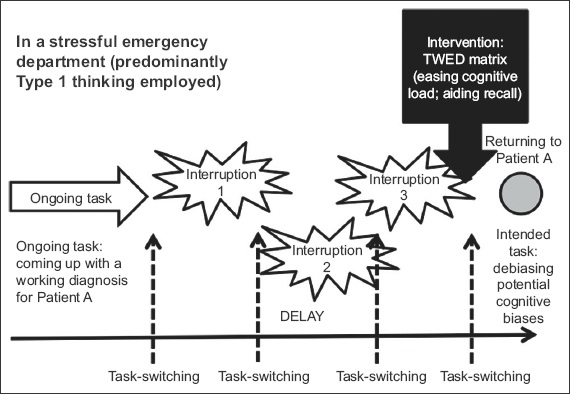

In a favourable clinical environment, metacognition can be executed with relative ease, as the clinician can afford the time and effort to do so. However, interruptions are ubiquitous in emergency departments. These interruptions often delay or prevent clinicians from executing their intention to recalibrate their thinking.(19) Interruptions also add to clinicians’ cognitive load, as they must switch from one task to another.(19) By the time clinicians return to their first patient after addressing numerous interruptions, they may be distracted and forget to execute their intention. It must also be emphasised that performing cognitive debiasing does not necessarily improve diagnostic accuracy.(17) In fact, in some cases, the contrary can be true.(17) Gathering more data may slow down the entire decision-making process unnecessarily, which can be detrimental when emergency interventions such as cardiopulmonary resuscitation are urgently required. This is especially the case if recalling the numerous cognitive biases, identifying those involved and picking the right debiasing strategy taxes working memory.

In this regard, a mnemonic checklist such as the TWED checklist can help clinicians to perform cognitive debiasing after having addressed numerous interruptions (Fig. 2).

Fig. 2

Diagram shows the difficulties of applying cognitive debiasing strategies in a chaotic emergency department and the point at which the TWED checklist can be applied.

The present study employed a quasi-experimental design. Although this may have weakened internal validity, the knowledge and experience of the students in the two groups can be expected to be similar, as they were selected sequentially at the beginning of their Year 5 semester, each with four years of undergraduate experience. Additionally, all students who had progressed to Year 5 would have passed their Year 4 clinical rotations and thus met the minimum standard expected of them. These rotations included internal medicine, surgery, obstetrics and gynaecology, paediatrics, orthopaedics, and neurology and neurosurgery.

Four other pertinent limitations need to be addressed in future research on the TWED checklist. First, the present study was not designed to demonstrate objectively that the TWED checklist had been successfully used as a cognitive debiasing strategy. Conducting direct laboratory studies on the effects of cognitive debiasing strategies is extremely challenging, as it cannot be ascertained whether the study participants committed any cognitive biases. Only decision-makers themselves would know whether they had committed cognitive biases in their train of thought; even for those who had, admitting to it is highly subjective and contingent on the individual’s awareness of cognitive biases during the decision-making process.(17) Second, the Hawthorne effect should be taken into consideration.(22) Students’ awareness that their decision-making was being observed after a tutorial session may have alerted them to possible ‘traps’ in the case scenarios. The challenge, therefore, is to investigate whether using the TWED checklist makes a difference in real-time clinical settings where the decision-maker is not being observed. Third, no matter how rigorous the methodology, research conducted in a classroom setting lacks the ecological validity of a complex clinical setting.(6) Mimicking the ambient environment of a stressful clinical setting is perhaps the greatest challenge faced by researchers who seek to study cognitive biases.(6) Finally, the present study used only a single educational intervention. It is unlikely that a one-time educational intervention on cognitive debiasing strategies remains effective over a long period of time.(9) People are likely to forget, and becoming skilled in using the TWED checklist requires repeated practice. Clinical decision-making is a complex process; experience, expertise and the necessary mindware all affect the quality of the decision.

The question remains whether the TWED checklist should be used as a ‘cognitive screening tool’ for every single clinical decision that clinicians make. McDaniel et al theorised that constant, prolonged exposure to a mnemonic cue offers no advantage (in aiding memory to execute intended actions) over having no cue at all.(19) For the mnemonic to be effective, it should only be used periodically.(19)

In conclusion, the results of the present study support the use of the TWED checklist to facilitate metacognition in clinical decision-making. Despite the limitations of this preliminary study, the results support further investigation into this tool to aid metacognition.

SMJ-57-699-appendix.pdf

ACKNOWLEDGEMENT

The authors would like to thank Prof Patrick Croskerry, Dalhousie University, Halifax, Nova Scotia, Canada, for his advice in the initial conception of the TWED checklist.