INTRODUCTION

Postgraduate training in the medical specialties in Singapore began transitioning from the British system to the American Accreditation Council for Graduate Medical Education (ACGME) residency programme (RP) around 2010. Singapore became the first country outside the United States to embrace this training programme, and radiology was one of the early adopters of this change.(1) Previously, training was likened to an apprenticeship where experience was gained ‘on the job’ and logbook documentation was akin to the keeping of a personal diary.(2-4) The learning journey was opportunistic and the contents of the logbook may not have been regularly reviewed to detect deficiencies in a timely manner.(5) The newer framework described six core clinical competencies for the trainee, now termed ‘resident’, and introduced multiple workplace-based assessment (WBA) tools to assess these competencies.(6-8) The WBA tools serve as formative assessments that regularly evaluate the resident’s performance in order to give feedback and identify gaps so that they can be remedied early.(2,3,9,10) The three tools that our programme utilises are: (a) the mini-clinical evaluation exercise (mini-CEX) applied to reading of radiographs or interpretation of computed tomography images; (b) directly observed procedure (DOP) for a fluoroscopic or interventional radiology task; and (c) the Global Performance Assessment (GPA) form at the end of a rotation.

In 2013, the ACGME rolled out Milestones as part of the Next Accreditation System (NAS).(11,12) It expanded upon the six core competencies, resulting in additional sub-competencies.(11,13) In line with this movement, radiology emerged, once again, as one of the early responders, and we are currently in the process of developing local Milestones. This makes it a good time to reflect on the performance of our raters thus far in using WBA tools. Presently, only a handful of articles have reported on the local experience in the RP and even fewer dealt with the specialty of radiology.(1,4,5)

This research aimed to determine if our WBAs have been undertaken appropriately, by using the GPA form (Appendix) as a proxy, for the following three reasons. Firstly, the GPA form is one of the most frequently used WBA tools and purports to assess all six core competencies.(7,8) Secondly, it is a common form completed for every resident in each rotation, unlike a DOP that would be used for an interventional radiology posting or a mini-CEX that would be utilised for a general body posting. Thirdly, it is the one used by the widest range of raters and hence can be a good gauge of our specialists.

METHODS

The population of this study included all residents enrolled in the Radiology RP of one of the three accredited radiology programmes in Singapore. Our study involved the retrospective review of secondary data collected from the programme's inception in July 2011 until July 2017. The training period for radiology spans five years and every resident undergoes three-month-long postings during this time. Each resident is assessed using the GPA form at the end of each three-month posting (or after six months if he/she stays for two consecutive postings in the same hospital). The programme director nominates one rater, a qualified radiologist, to undertake the assessment and complete the entire form. The radiologist may be part of the core or clinical faculty, the former being an official appointment that comes with protected time for RP duties. All the core faculty and the majority of the clinical faculty have undergone WBA training, but in the utilisation of the mini-CEX and not specifically the GPA. The rater is selected based on Radiology Information System/Picture Archiving and Communications System data indicating which radiologist had the greatest number of interactions with the resident during that posting. After being assigned, raters are given up to two weeks to deliberate before submitting their assessment.

The GPA form for radiology was derived after making a few modifications to a generic form used by the sponsoring institution. The form consisted of 22 question items that assessed the six core competencies. Each item was marked on a 9-point Likert scale. There was an option for not giving a score and instead selecting ‘Not applicable/not observed’ (NA/NO), with no distinction made between the two. There was also a space below the 22 items for comments, which allowed the rater to give qualitative feedback. Among all the question items, item Q22 epitomises the complexity of translating clinical criteria to make them applicable to radiology: its equivalent targets could be interpreted as the ability of the resident to understand the gist of the clinical problem, his/her accuracy and speed of reporting, and timeliness in informing the clinician of a critical finding.
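The structure of the form lends itself to a simple per-item tally. The following is a minimal sketch only, assuming a hypothetical encoding in which each form is a list of 22 entries and `None` stands for a ticked NA/NO box; the function name `summarise_item` and the toy forms are illustrative and are not taken from the study data.

```python
from typing import Optional, Sequence

LIKERT_MIN, LIKERT_MAX = 1, 9  # 9-point Likert scale used on the form
N_ITEMS = 22                   # items Q1..Q22

# Hypothetical encoding: one form = 22 entries, None = 'NA/NO' selected.
Form = Sequence[Optional[int]]


def summarise_item(forms: Sequence[Form], item: int) -> dict:
    """Tally valid scores and NA/NO responses for one item (1-based: Q1..Q22)."""
    if not 1 <= item <= N_ITEMS:
        raise ValueError("item must be between 1 and 22")
    column = [form[item - 1] for form in forms]
    valid = [score for score in column if score is not None]
    if any(not LIKERT_MIN <= score <= LIKERT_MAX for score in valid):
        raise ValueError("score outside the 9-point scale")
    return {
        "valid": len(valid),
        "na_no": len(column) - len(valid),
        "min": min(valid) if valid else None,
        "max": max(valid) if valid else None,
    }


# Three made-up forms, purely for illustration.
forms = [
    [6] * 22,                     # every item scored 6
    [5] * 21 + [None],            # Q22 marked NA/NO
    [7] * 15 + [None] + [7] * 6,  # Q16 marked NA/NO
]

q16 = summarise_item(forms, 16)
q22 = summarise_item(forms, 22)
print(q16)
print(q22)
```

Because the form does not separate ‘not applicable’ from ‘not observed’, a tally of this kind can only report the two together.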

We characterised rater behaviour using descriptive statistics and examined the dimensionality of the assessment using exploratory factor analysis (EFA). Analysis was performed using IBM SPSS Statistics version 24.0 (IBM Corp, Armonk, NY, USA). Ethics approval was granted by the National Healthcare Group Domain Specific Review Board.
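To illustrate what a dimensionality check involves, the sketch below counts the eigenvalues of the item correlation matrix that exceed 1 (the Kaiser criterion), a common first step before factor extraction. It is not the SPSS procedure used in this study, whose extraction and rotation settings are not described here. The data are synthetic, generated from a single latent trait, and the function name `kaiser_factor_count` is hypothetical. The sketch also assumes complete data, whereas real forms contain NA/NO entries that would need handling first.

```python
from typing import Tuple

import numpy as np


def kaiser_factor_count(scores: np.ndarray) -> Tuple[int, np.ndarray]:
    """Return the number of correlation-matrix eigenvalues > 1 and the
    eigenvalues themselves, sorted from largest to smallest.

    `scores` has one row per form and one column per item.
    """
    corr = np.corrcoef(scores, rowvar=False)      # item-by-item correlations
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]  # eigvalsh returns ascending order
    return int(np.sum(eigenvalues > 1.0)), eigenvalues


# Synthetic data (assumption): 297 forms x 22 items driven by one latent trait.
rng = np.random.default_rng(0)
n_forms, n_items = 297, 22
latent = rng.normal(size=(n_forms, 1))        # one overall impression per form
noise = rng.normal(size=(n_forms, n_items))   # item-specific variation
scores = np.clip(np.rint(5 + latent + 0.5 * noise), 1, 9)  # 9-point scale

n_factors, eigenvalues = kaiser_factor_count(scores)
print(n_factors)
print(np.round(eigenvalues[:3], 2))
```

When one eigenvalue dominates in this way, the 22 items behave as if they measure a single underlying dimension rather than six separate competencies.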

RESULTS

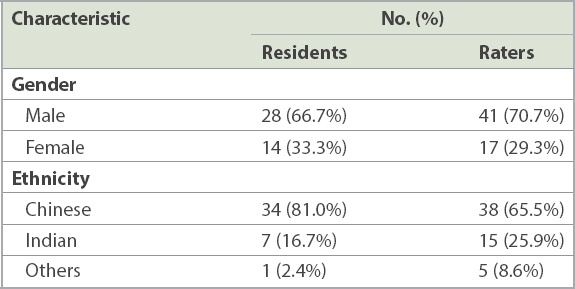

A total of 297 valid GPA forms were collated from all 42 of our residents to ensure a good sample size in this niche specialty. Two batches totalling 15 residents had graduated from the five-year programme and had been assessed on 9–14 occasions each. There were 58 raters who conducted an average of 5.1 assessments each (Table I).

Table I

Demographics of the 42 residents and 58 raters.

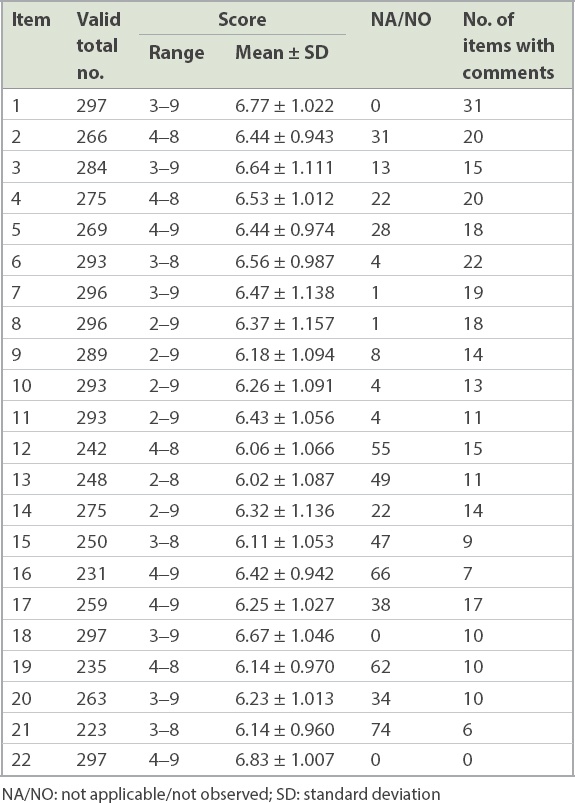

There were no invalid or missing answers to the 22 items (Table II).

Table II

Total number of valid responses, score range, NA/NO responses and available comments of the 22 items.

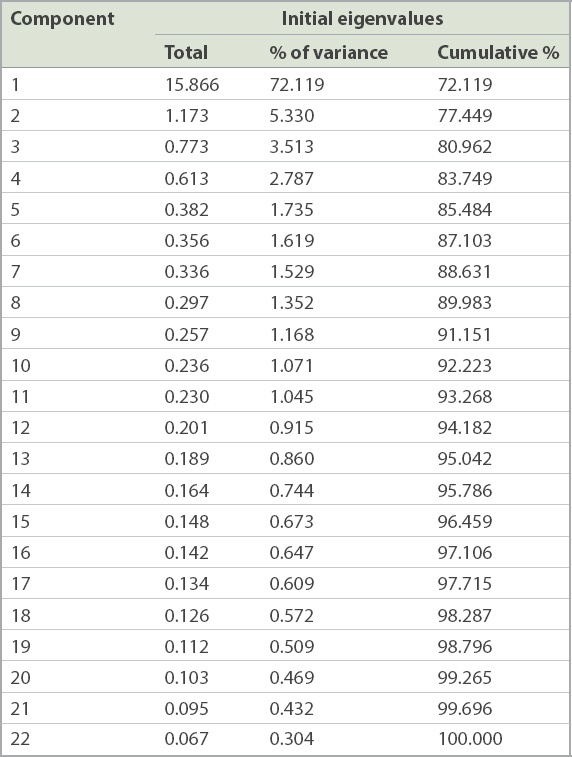

Table III

Exploratory factor analysis of the 22 items in the Global Performance Assessment form.

DISCUSSION

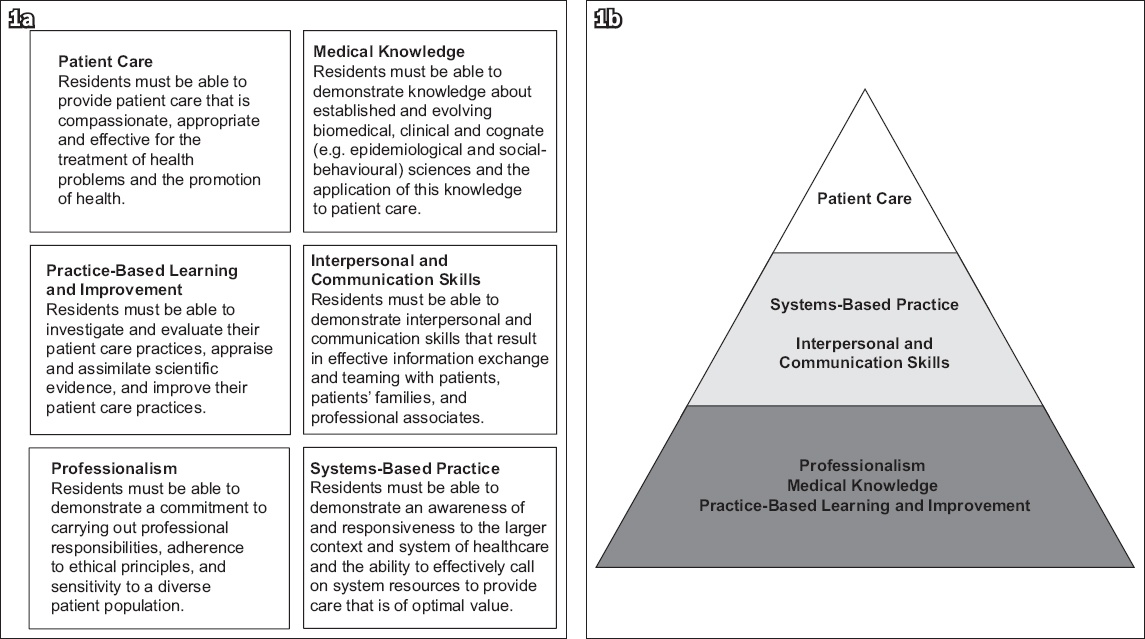

Although the ACGME has given equal weightage to the six core clinical competencies, the literature has revealed that raters have been assessing based on a one- or two-dimensional perspective, with two broad components reflecting either the competence or friendliness of the resident.(6,8) The hierarchical layering described by Khoo et al,(1) which places patient care as the most important competency, may explain the single dimension in our local assessment. It is based on the belief that this competency encompasses the knowledge and skills of the other five (Fig. 1).

Fig. 1

Diagrams show (a) the six core clinical competencies as defined by the Accreditation Council for Graduate Medical Education, where each component is given equal weightage and (b) the hierarchical model proposed by Khoo et al,(1) which places the patient care component at the apex of a layered pyramid.

A rather similar trend, termed straight-line scoring (SLS), has been reported when using Milestones in the ACGME system.(14) One reason cited for SLS is that raters generalise the ratings of one sub-competency to the remaining ones in the same section.(14) It may be due to lack of granularity in differentiating between the sub-competencies or an attempt by raters to simplify work, seeing that in Milestones, the initial six competencies are subdivided into multiple smaller components and the number of required assessments has increased. Given that we are in the development phase for local Milestones, we should aim to avoid this potential problem by crafting our sub-competencies so that they remain as distinct entities. Subsequently, training should emphasise to raters the importance of assessing each sub-competency on its own merits.(14)

Our results show that raters exhibited a central tendency effect, some with additional restriction of range. This could be an influence of the British model, which utilises a close marking system that follows a bell-shaped distribution and rarely gives marks at either extreme.(15,16) Only two raters used Likert scores of < 4, which may be another sequela of the close marking system that aims to avoid giving too low a score in one category so that the resident will have an opportunity to make amends in another category in order to pass.(15)
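The two rater effects described above can be expressed as simple per-rater summaries. The sketch below is illustrative only: the function name `rater_profile`, the `max_span` threshold for calling a range restricted, and the example scores are assumptions and do not reproduce the definitions or data used in this study.

```python
from statistics import mean
from typing import Sequence


def rater_profile(scores: Sequence[int], scale_min: int = 1,
                  scale_max: int = 9, max_span: int = 2) -> dict:
    """Summarise how one rater uses the scale.

    `max_span` is a hypothetical cut-off: a rater whose scores span no more
    than this many points is flagged as showing restriction of range.
    """
    midpoint = (scale_min + scale_max) / 2   # 5 on a 9-point scale
    span = max(scores) - min(scores)
    return {
        "lowest": min(scores),
        "highest": max(scores),
        "span": span,
        "mean_offset": mean(scores) - midpoint,  # near 0 suggests central tendency
        "restricted": span <= max_span,
    }


# Made-up scores from one rater across several forms.
profile = rater_profile([5, 6, 6, 7, 6, 5])
print(profile)
```

A rater with a small `mean_offset` and a narrow `span` is using only the middle of the scale, which is the pattern a close marking system would be expected to produce.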

As our residents still sit for the Royal College fellowship examinations in their third or fourth year of training, the British influence endures, and more research is necessary to confirm or refute its secondary effects on the raters. Furthermore, with the electronic version of our GPA form, giving a failing mark requires raters to justify themselves by providing comments before the form can be successfully submitted. Artificially tweaking marks to ensure that a resident scrapes through with a pass would circumvent this time-consuming chore. Lastly, the absence of criterion standards or behavioural descriptors representing each Likert score in our GPA form may partly explain these rater effects.(17) Milestones attempts to overcome this by having detailed descriptive narratives for each competency and even sub-competency.(11) Furthermore, it includes a timeline that tracks proficiency levels from novice to beginner, then to competent, followed by proficient, and finally expert.(11) Despite these modifications, exaggerated marking has still been reported when using Milestones.(12) Generalised inflated scoring may result in an inability to discriminate between residents at different levels of training and, thus, yield no useful information.(12) Hence, improvement in form design should occur in parallel with rater training.(11,12) While we advance towards Milestones, concurrent rater training is necessary to ensure that raters adhere to the marking rubrics, lest inappropriate usage of these forms clouds future Milestone data.

Another issue with the current GPA form is that no distinction is made between the NA/NO scores, resulting in a reduced number of valid responses for certain items. The ‘NA’ option can provide raters with an avenue to abstain from giving a committed response, especially if it meant failing the resident.(10,12) The ‘NO’ option is more specific and reserved for rare events that are not observed during the period being assessed.(12) Very few NA/NO checkboxes were ticked for items Q7–Q10, which are in the Medical Knowledge section, as these were more tangible and easily graded by raters. On the contrary, a high percentage (> 20%) of NA/NO responses was given for item Q16 (under Patient Care) as well as items Q19 and Q21 (under Systems-Based Practice), as these items appear more abstract to evaluate but are nonetheless still applicable, as they represent core clinical competencies. Future form design should distinguish ‘NA’ from ‘NO’ in order to determine if it is a case of ‘failure to fail’ or a rare scenario not observed during that posting.(10) Simultaneously, rater training should be targeted at interpreting behavioural descriptors properly so that fewer ‘NA’ marks are awarded.

The privilege of providing qualitative feedback was underutilised at only 4.7%, compared to rates quoted in the literature of up to 50%.(18) Although this may be an indirect consequence of not wanting to fail a resident and avoiding extra commentary, there could be another reason. Assuming that raters had a traditional mindset, additional comments, especially in a summative assessment, were generally assumed to be negative and rarely given for fear of jeopardising the resident.(19) Although this WBA is undertaken at the end of the posting and may appear summative, it is nevertheless formative because there are more postings yet to come. Raters should be reminded to provide good qualitative feedback instead of one-sided criticism, as the intention is to guide the resident to improve for future rotations.

The main limitation of this study is that it is descriptive. Hence, complementary qualitative research would help to elucidate whether our raters were still influenced by British practice and/or were merely inexperienced in navigating the new ACGME forms. The other limitation lies with employing the GPA form as a proxy for our other WBAs. It is less specific when compared to the DOP or mini-CEX.(13) This form is usually completed at the end of the rotation and records one’s impression of the resident over a period of time and not for a specific task. WBAs are better graded at or around the time of assessment, and this retrospective completion introduces lapses during recollection of events.(20) Some items may be given unfair weightage due to the ‘recency effect’, where the most recent performance of the resident will have a higher bearing in the eyes of the rater, while responses to other items may be limited to selective recall or secondhand information.(6,7,9)

In conclusion, radiology training in Singapore is unique, given its long association with the Royal College and the recent move to pursue ACGME’s RP and NAS. The results of this study reveal that our radiology training is still traditional and tends to focus more on tangible competencies such as medical knowledge and how the resident communicates with fellow healthcare professionals. However, it is not impossible to straddle both new and old systems as long as we keep the criteria of each one separate and adopt distinct mindsets when performing their respective assessments. As we transition towards using Milestones, improvements in form design and concurrent rater training are more crucial than ever.

ACKNOWLEDGEMENTS

We would like to thank Dr Lye Che Yee, PhD, School of Education, University of Adelaide, Australia, for her statistical advice and assistance.

SMJ-62-149-Appendix.pdf